Deep Learning with Attention-Transform Variations for Interpretable Brain Tumor Classification

DOI: https://doi.org/10.64105/778mb321

Keywords: Brain tumor classification, interpretable AI, convolutional variational attention transform, ResNet50, explainable AI, MRI imaging.

Abstract

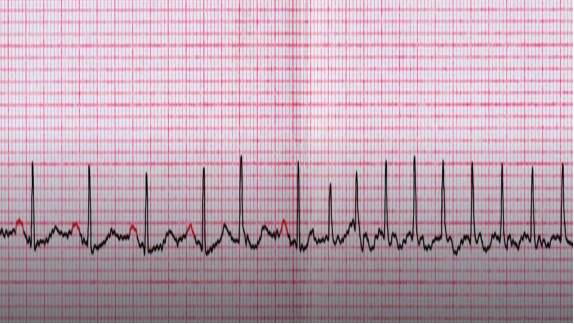

Brain tumors pose a significant global health challenge, and early, accurate diagnosis is essential for effective treatment. Manual interpretation of MRI scans by radiologists is time-consuming and error-prone. Deep learning models achieve high accuracy, but they behave as black boxes, and this opacity undermines clinical trust and adoption. Data privacy regulations such as GDPR and HIPAA further complicate centralized training on diverse datasets, which limits model generalization in resource-constrained settings. This paper proposes ConVAT, an interpretable deep learning model that combines a ResNet50 convolutional backbone, a variational autoencoder for latent representations, and transformer-based attention for spatial and contextual focus. ConVAT is trained on three diverse MRI datasets: BraTS-Africa (binary classification of glioma vs. other neoplasms; 95 patients), Figshare (multi-class classification of glioma, meningioma, and pituitary tumor; 233 patients), and Brain Tumor Progression (progression stages early, mid, and late; 20 patients, 8,798 images). To enhance transparency, it incorporates explainable AI (XAI) techniques including Smooth Grad-CAM, Score-CAM, and attention visualizations. After 50 training epochs, the model achieves test accuracies of 98.7%, 99.70%, and 99.11% across the three datasets, with weighted precision, recall, F1-score, and specificity of 98.7%, 99.70%, and 99.11%, outperforming baselines in generalization and interpretability. Comparative analysis identifies Score-CAM as the most reliable XAI method for clinical validation. By addressing opacity, privacy, and efficiency, ConVAT supports trustworthy AI deployment in healthcare, improving diagnostic reliability and radiologist confidence.
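The abstract names three components (a ResNet50 backbone, a variational autoencoder latent space, and transformer-based attention) but does not say how they are wired together. The NumPy sketch below shows one plausible arrangement under stated assumptions. It is not the authors' implementation. The class `ConVATHeadSketch`, its dimensions, the single attention head, and the concatenation of the latent code with the attended features are all hypothetical choices. Only the variational bottleneck (encoder, reparameterization, KL term) is shown; a full VAE's decoder and reconstruction loss are omitted. The ResNet50 output is replaced by a random 7×7×2048 tensor, which is the shape of ResNet50's final convolutional stage for a 224×224 input.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    # Numerically stable softmax along one axis.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


class ConVATHeadSketch:
    """Hypothetical fusion head on top of a ResNet50 feature map.

    Not the authors' code: layer sizes, the single attention head, and the
    concatenation of the latent code z with attended features are assumptions.
    """

    def __init__(self, channels=2048, d_model=256, latent_dim=64,
                 num_classes=3, seed=0):
        self.rng = np.random.default_rng(seed)

        def init(fan_in, fan_out):
            # Random weights scaled by 1/sqrt(fan_in) keep activations O(1).
            return self.rng.normal(0.0, 1.0 / np.sqrt(fan_in), (fan_in, fan_out))

        self.w_proj = init(channels, d_model)      # 1x1-conv-style channel projection
        self.w_q = init(d_model, d_model)
        self.w_k = init(d_model, d_model)
        self.w_v = init(d_model, d_model)
        self.w_mu = init(d_model, latent_dim)      # VAE encoder: mean
        self.w_logvar = init(d_model, latent_dim)  # VAE encoder: log-variance
        self.w_cls = init(d_model + latent_dim, num_classes)

    def __call__(self, fmap, training=True):
        # fmap: (H, W, C) feature map, e.g. (7, 7, 2048) from ResNet50's last stage.
        h, w, c = fmap.shape
        tokens = fmap.reshape(h * w, c) @ self.w_proj           # (N, d) spatial tokens
        q, k, v = tokens @ self.w_q, tokens @ self.w_k, tokens @ self.w_v
        attn = softmax(q @ k.T / np.sqrt(q.shape[-1]), axis=-1)  # (N, N) scaled dot-product
        pooled = (attn @ v).mean(axis=0)                         # (d,) global context vector

        mu = pooled @ self.w_mu
        logvar = pooled @ self.w_logvar
        if training:
            # Reparameterization trick: z = mu + sigma * eps, eps ~ N(0, I).
            z = mu + np.exp(0.5 * logvar) * self.rng.standard_normal(mu.shape)
        else:
            z = mu  # deterministic latent at inference time
        # KL(q(z|x) || N(0, I)); a training loss could add beta * kl to cross-entropy.
        kl = -0.5 * np.sum(1.0 + logvar - mu ** 2 - np.exp(logvar))

        probs = softmax(np.concatenate([pooled, z]) @ self.w_cls)
        # Average attention each spatial position receives: a coarse saliency map.
        attn_map = attn.mean(axis=0).reshape(h, w)
        return probs, kl, attn_map


fmap = np.random.default_rng(1).standard_normal((7, 7, 2048))  # stand-in for ResNet50 output
head = ConVATHeadSketch(num_classes=3)  # e.g. glioma / meningioma / pituitary
probs, kl, attn_map = head(fmap, training=False)
print(probs.round(3), attn_map.shape)
```

The `attn_map` returned here is only a rough analogue of the attention visualizations the abstract mentions; gradient-based maps such as Smooth Grad-CAM and perturbation-based maps such as Score-CAM are computed differently and are not implemented in this sketch.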
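The abstract reports weighted precision, recall, F1-score, and specificity but does not define the weighting. The sketch below assumes the common convention: each per-class score is computed one-vs-rest from a confusion matrix and then averaged with weights equal to each class's share of the true samples (its support). The function name `weighted_metrics` and the sample confusion matrix are hypothetical, and the counts are illustrative, not results from the paper.

```python
from typing import Dict, Sequence


def weighted_metrics(cm: Sequence[Sequence[int]]) -> Dict[str, float]:
    """Accuracy plus support-weighted precision, recall, F1 and specificity.

    cm[i][j] is the number of samples with true class i predicted as class j.
    Each per-class score is computed one-vs-rest, then weighted by that
    class's support (its number of true samples).
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    out = {"accuracy": sum(cm[k][k] for k in range(n)) / total,
           "precision": 0.0, "recall": 0.0, "f1": 0.0, "specificity": 0.0}
    for k in range(n):
        tp = cm[k][k]
        fn = sum(cm[k]) - tp                       # true k, predicted as another class
        fp = sum(cm[i][k] for i in range(n)) - tp  # predicted k, truly another class
        tn = total - tp - fn - fp                  # neither true nor predicted k
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        specificity = tn / (tn + fp) if tn + fp else 0.0
        weight = (tp + fn) / total                 # class support / total samples
        out["precision"] += weight * precision
        out["recall"] += weight * recall
        out["f1"] += weight * f1
        out["specificity"] += weight * specificity
    return out


# Illustrative 3-class confusion matrix (made-up counts, not results from the paper).
cm = [[50, 2, 0],
      [3, 45, 2],
      [0, 1, 47]]
for name, value in weighted_metrics(cm).items():
    print(f"{name:>11}: {value:.4f}")
```

Because each class's recall is weighted by its own support, weighted recall reduces algebraically to overall accuracy (the sum of true positives divided by the total), so the two figures always coincide under this scheme. scikit-learn's `precision_recall_fscore_support` with `average="weighted"` applies the same support weighting to precision, recall, and F1, but it has no built-in specificity, which is why specificity is computed by hand here.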